3.1 Next Steps

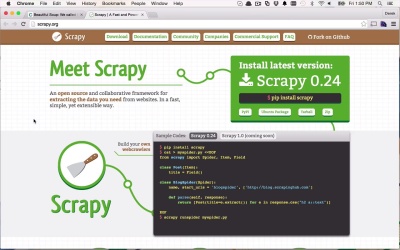

Now that you understand the basics of crawling and scraping with Python, why not use some common tools and libraries that already exist to make the job even easier? I recommend checking out Beautiful Soup and Scrapy to make your application more robust and easier to maintain.

Finally, I'd like to show you a couple of different options for retrieving additional data in a more structured, and maybe simpler, way than just using XPath. There are a couple of different libraries out there that will definitely make this easier for you.

One of those is Beautiful Soup. It's an unusual name, but it's a very nice library that lets you parse the HTML or XML you get from websites, or from any other source. It works really well and makes it easy to traverse the document tree. Beautiful Soup gives you built-in navigation attributes such as children, parent, and descendants, so you don't have to worry about writing XPath query strings; you can use those attributes to do the same thing. If you're unfamiliar with XPath, I think that can make your code a little easier to read.

I'd also take a look at Scrapy. It's a very interesting open source framework, so you can download the source code, play around with it, read through the documentation, and really learn what a robust scraper is all about. Both Beautiful Soup and Scrapy have pretty good documentation that walks you through ways of making these tasks even easier, and both are a bit more robust than what you might throw together in an afternoon or an evening. If you're interested in extracting data from sites, pulling it all together in a centralized location, and doing something with it, as I was in a recent project, this might be a good way to at least get started and learn more.

So I hope you've enjoyed this course, and that you've learned not only a little bit about crawling and scraping, but also how easy it is to do with Python. Hopefully, as we continue to add more Python content to Tuts+, you'll keep learning. Can't wait to see you next time. Thanks!
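To make the Beautiful Soup tree navigation described above a bit more concrete, here is a minimal sketch using Beautiful Soup 4 (`bs4`) with Python's built-in `html.parser`. The sample HTML, the `articles` id, and the link URLs are made up for illustration; in practice the markup would come from a page you fetched. The comments note the rough XPath equivalent of each step.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup; a real scraper would use the fetched page's HTML.
HTML = """
<html><body>
  <ul id="articles">
    <li><a href="/posts/1">First post</a></li>
    <li><a href="/posts/2">Second post</a></li>
  </ul>
</body></html>
"""

# "html.parser" ships with Python, so no extra parser (such as lxml) is needed.
soup = BeautifulSoup(HTML, "html.parser")

# Find the list by tag name and id attribute.
articles = soup.find("ul", id="articles")

# .children yields direct children only, including the whitespace strings
# between tags, so keep just the <li> tags. Roughly: //ul[@id='articles']/li
items = [child for child in articles.children if child.name == "li"]

# .descendants walks the whole subtree; pick out the <a> tags.
# Roughly: //ul[@id='articles']//a
links = [node for node in articles.descendants if node.name == "a"]
titles = [a.get_text() for a in links]
hrefs = [a["href"] for a in links]

# .parent moves back up the tree: each <a> here sits inside an <li>.
parents = {a.parent.name for a in links}

print(len(items), titles, hrefs, parents)
```

Text nodes in Beautiful Soup report a `.name` of `None`, which is why filtering on `.name` is a convenient way to skip the whitespace strings that `.children` and `.descendants` also return.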