- Overview

- Transcript

1.2 Getting Set Up

1.Introduction2 lessons, 14:16

1.1Introduction05:42

1.2Getting Set Up08:34

2.Intro to Crawling and Scraping4 lessons, 38:52

2.1Where to Find the Data06:05

2.2Creating the Code Structure07:51

2.3Beginning to Extract Data13:06

2.4Crawling to Other Pages11:50

3.Conclusion1 lesson, 02:06

3.1Next Steps02:06

1.2 Getting Set Up

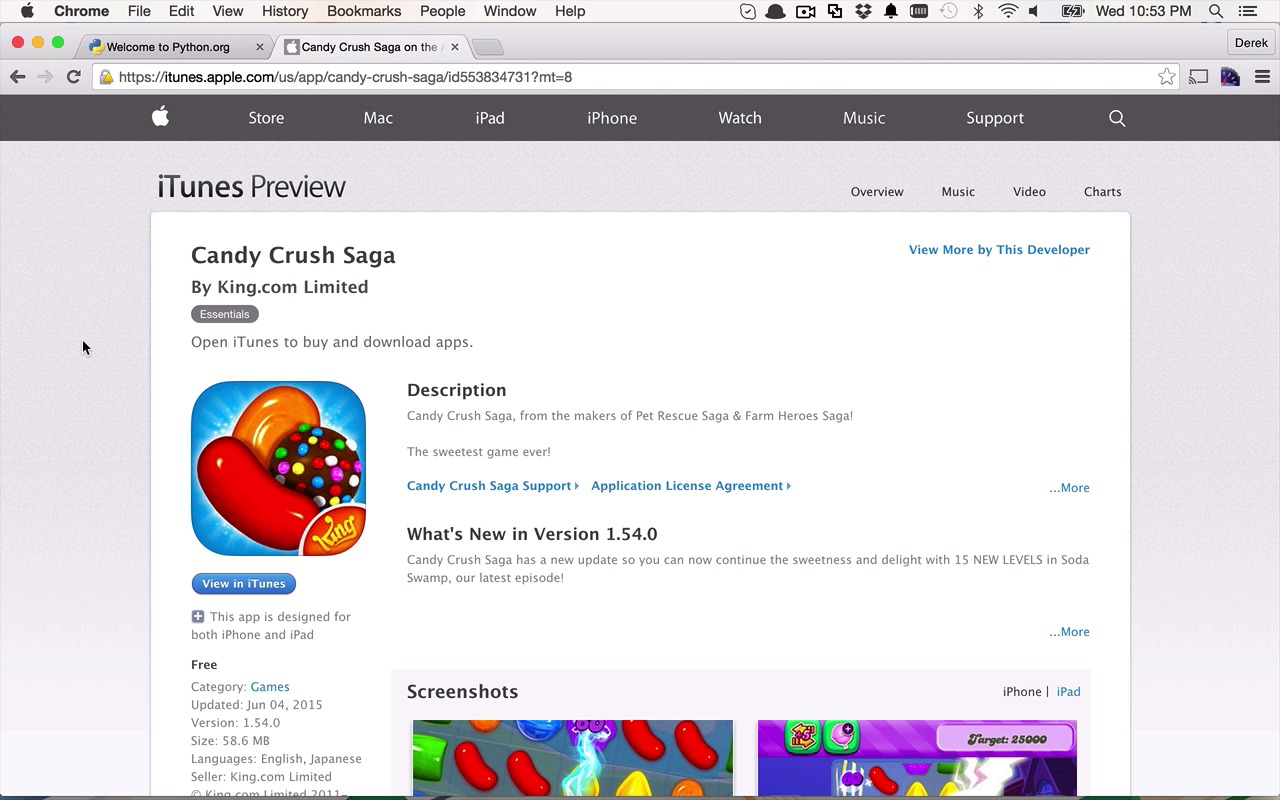

So as I mentioned before for this course, we're going to be using Python to ultimately go out and navigate through the web a little bit, starting in a particular URL that we want to discuss here in just a few moments and ultimately extract some data from either that page that we're starting on and also other pages that we can then crawl to. That's where the whole crawling and scraping concept comes from, but before we can do that, we actually have to have the correct tools installed. We're going to start with Python, obviously, because that's going to be our programming language of choice. And it's quite simple to get this. Depending on the platform you're running on, you may have to download it if it's not already installed. And that's typically going to come from Windows Out of the box Windows doesn't always necessarily come with some sort of Python installed. It's quite easy to get it. You can just navigate to python.org and go to the downloads section and select your platform and operating system of choice, as well as the version that you would like to download. Now for this particular course I'm gonna be using the 2.7 but you could probably follow along with very little modification using a number of the 3.x versions of Python. Now if you're using say a Mac or Linux then odds are you may have some version of Python installed, and once again Simply to verify that, you can simply type in the command python-V and that's going to give you the version and as I said before, I'm using the 2.7 version, but you could probably follow along with the 3.0 version. Next, I would like to talk a couple of different things that we're going to need. We're going to need a simple way to parse XML or HTML or what have you, as we're working with the content's that coming from the web. But we're gonna talk about that a little bit more in an upcoming lesson, but it would be much easier if we had some easy way to parse out some data without having to do it manually. And we're gonna use a library for that. A prebuilt library that we can download it and install, but its very important when you start using new programming languages or platforms, there's typically a way to structurally download and install packages or dependencies that you have for your different tasks that you want to do, and Python is no different. In Python, we have something called Pip. Now, Pip is really joust a package manager for Python, and if you head over to pypi.python.org, and you can go a little bit deeper in here, and search around and you're going to find Pip, and you're gonna be able to read a little bit more about it, but ultimately you're gonna wanna learn about the installation. And it's actually quite easy. You can install, you can download this Python file right here and then simply execute Python and then get pip.py and it's going to install Pip for you. So once you have that, it makes your world so much easier to download and install different libraries. And before I was referencing and xml library to help us parse through some data, and that particular library that we're going to use in this particular course is called LXML. It's a very nice, lightweight library that's going to make these tasks quite easy. In order to actually take care of the downloaded installation of that, we want to go over to a command shot and once we've downloaded and installed Pip, as we saw in a previous step, I can simply type in the word Pip Install and then specify what name of a library I want to install. In this case I want to install LXML. Now it's gonna go out and check and it's gonna say well the requirement's already satisfied because I have already installed it. But odds are if you don't have it installed it's gonna go through the process of downloading it and installing it for you. So once you've gotten through this step, you should have LXML installed and then the next thing we want to do is we can definitely write some HTTP request code, and use some low level libraries that come built in with Python. But once again, there are libraries out there that make that much easier for us and the one that I'm gonna be using, specifically for this, is called Requests. And Requests is just a nice, little high level library that's gonna make it very easy for us to issue different HTTP requests, like gets and posts and puts and deletes. And as you can see here the code is quite simple. We simply use the Requests class and we just use the methods: get, post, put, delete, what have you and then pass, obviously, some other parameters should we need to. So once again to install that, we can use Pip again. I could say, pip Install requests, and once I already have this installed, but as you can see here, Pip will make that very easy for you to ultimately install and get ready. If you wanted to kind of test that out and see if that's actually working, what you can do is you can enter in the Python command shell or the interpreter. I can just type in Python and now I'm able to just type in commands and I can start to say that I want to import certain things. I can say from XML, I want to import HTML. That executes correctly. So that means, yes, I truly do have LXML installed, just like I would imagine I would want to and I can do the same thing for requests. I can say import requests, and that is going to execute successfully as well. It's able to find that I not only have those things installed, but they're also accessible to me. Once I've done that, that's all great and wonderful, so I'm gonna go ahead and quit out of here. Now, what I would want to do is introduce what our target is going to be and I had a hard time coming up with a target that we could ultimately run off and get some HTTP requests too, and scrape out some data, and the reason that becomes difficult is because of this. Typically, when you're dealing with making requests out to websites, the structure of those websites can change, whether it's the CSS that's changing or the Javascript or the structure of the Dom, or what-have-you, because ultimately, when you are scraping data, you are taking a snapshot of what the underlying HTTP, or excuse me, what the underlying HTML looks like and then picking it apart based on the structure of the developers on the other end. Now, a lot of time those things change, and you may be writing your code expecting or assuming that the structure is gonna be one way and then maybe a week or two weeks or a month or two months down the road, the structure of that might change. So this is a little bit of a caveat to say scraping data is error prone. It's not an exact science and you will run into errors occasionally and you may have to make some modifications, but I think this will be enough to get you started so you can at least get these things moving and then ultimately figure out how to do this for yourself. What I chose to do is I chose to go out to the iTunes preview site and start on a particular app or on a game or something like that. In this case, I'm going to start off with Candy Crush Saga, and I want to write a Python crawler and scraper that's going to start on a particular page, start on this page, that I am specifying to my application. Then, I wanted to pull out some data. So I want to pull out the title here, Candy Crush Saga I want to pull out the developer or the author. I'll leave the description for now, I'm not too concerned about that. I want to pull out the price. Obviously in this case it's free, but other apps may not be. And then there's screen shots and some other interesting information and ultimately you get down to the bottom here. Customers Also Bought. This is a very interesting piece of information for me because if I like a particular game, if I like Candy Crush, I wanna know what other people who liked that game or have downloaded that game also like or also have downloaded, or purchased, or what have you. So I'm gonna be interested in this Customers Also Bought section. That's typically going to be what I'm going to be looking for in my app. I wanna specify a URL to start at and then I want to pull out some pieces of information and then I wanna go and find the links for the Customers Also Bought. Then I want to follow those links to those other pages and then once again pull that other information out: the name and the developer as well as the price. I think we'll be able to get started there and really allow us to start to build a very simple crawler and scraper that you can ultimately evolve into using it for your purposes to do whatever you may want. So now that we've got this all kind of setup, let's go ahead how we're actually going to structure our app to do this work for us.