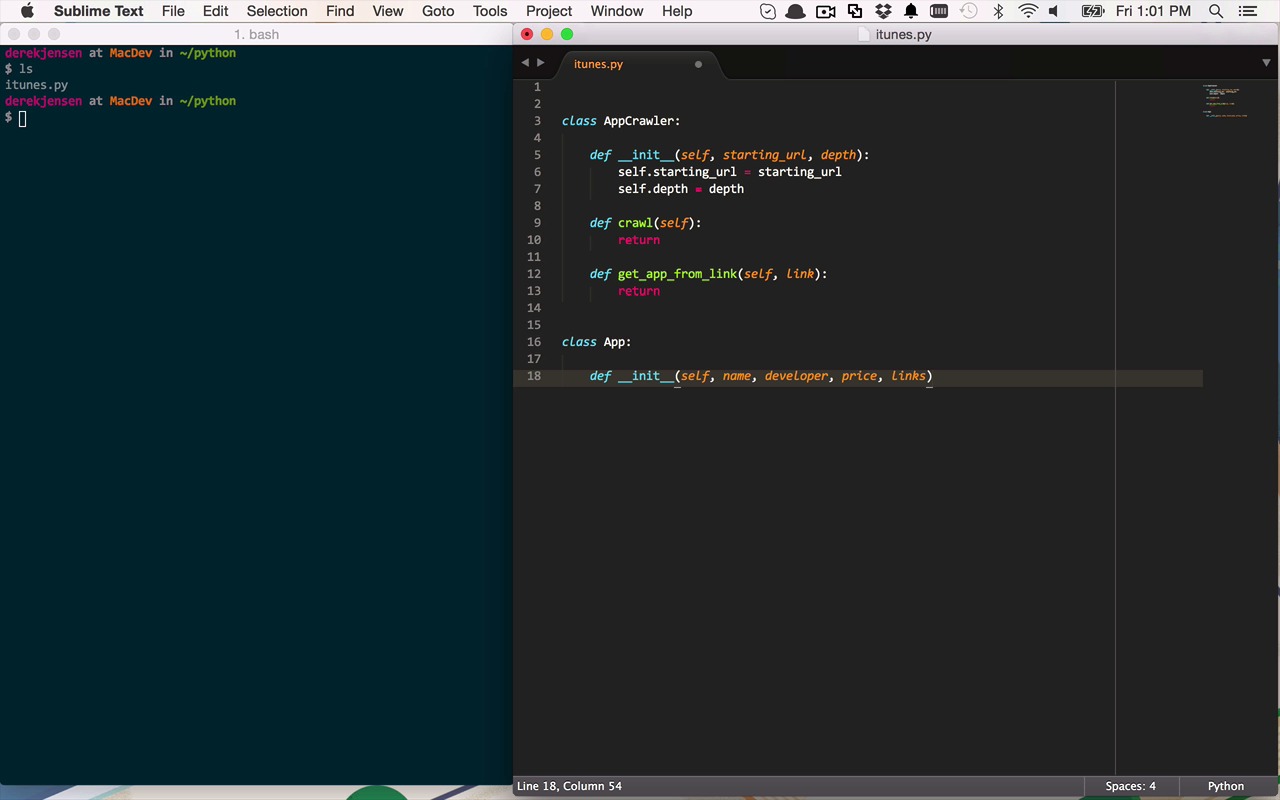

2.2 Creating the Code Structure

Just because we are coding a quick example of web crawling and scraping doesn't mean we should just slop code into our editor. In this lesson, we will create a basic logical structure for our app that contains the following two classes:

- `AppCrawler`: for doing the crawling
- `App`: to hold the scraped data

Okay, let's start getting into the code. As you can see, I'm using Sublime Text, but you can use any text editor you like. That's one of the nice things about Python: you can write the code in a plain text file and then run it from the command prompt. I've also got a shell open, and all I have in there is one file called iTunes.py, which is still empty. Let's change that. Before we start pulling out any data, let's build out the structure of this crawler, and we'll build it somewhat modularly. First, I'm going to create a class, because I want to keep similar functionality together. This class is going to be my AppCrawler. The idea is that I can give it a URL and a depth, meaning how deep I want to crawl within the links I find on the app pages, and then have it kick off all of its logic. Let's start with a constructor, so we'll define an `__init__` function. It takes in `self` and a starting URL. That URL is the first app I provide, something I might like, and from there the crawler will ultimately try to find other apps I might be interested in. As I mentioned, we'll also pass in a depth that says how deep we want to go. If I pass in 0, I only want information about the app at the URL I'm passing in. If I pass in 1, I want to go one layer deep from there; 2 means two layers deep, and so on.
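To make the depth rule concrete, here is a small Python 3 sketch that walks a made-up, in-memory link graph breadth-first and stops following links once it reaches the requested depth. The `LINKS` dictionary, the page names, and the `pages_within_depth` helper are all hypothetical; the lesson hasn't yet decided how the crawler will traverse links, so treat breadth-first order as one reasonable assumption rather than the course's implementation.

```python
from collections import deque

# Hypothetical link graph standing in for real app pages:
# each key is a page, each value lists the "related app" links found on it.
LINKS = {
    "app-a": ["app-b", "app-c"],
    "app-b": ["app-d"],
    "app-c": ["app-d", "app-e"],
    "app-d": [],
    "app-e": ["app-a"],
}


def pages_within_depth(starting_url, depth):
    """Return every page reachable from starting_url in at most `depth` link hops."""
    seen = {starting_url}
    order = [starting_url]
    queue = deque([(starting_url, 0)])
    while queue:
        url, level = queue.popleft()
        if level == depth:
            continue  # don't follow links past the requested depth
        for link in LINKS.get(url, []):
            if link not in seen:  # skip pages we've already queued
                seen.add(link)
                order.append(link)
                queue.append((link, level + 1))
    return order


print(pages_within_depth("app-a", 0))  # ['app-a']
print(pages_within_depth("app-a", 1))  # ['app-a', 'app-b', 'app-c']
print(pages_within_depth("app-a", 2))  # ['app-a', 'app-b', 'app-c', 'app-d', 'app-e']
```

Depth 0 returns only the starting page, matching the "only the app at the URL I pass in" case; each extra level adds the pages linked from the previous layer.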
Now let's save these values. I'll create a `starting_url` property and set it to `starting_url`, and we'll do the same with `depth`. We'll add to this in a little bit, but for now that's enough to get us started. Next, I'm envisioning another method called `crawl`. It takes in `self`, and it's going to be the entry point into this logic: I'll create a new instance of my AppCrawler, call its `crawl` method, and that will go and do all the work. We'll leave it empty for now and just return. Finally, I want some separation between the pieces. Crawling is what kicks everything off, but I also want to be able to take each link I find, starting with the starting URL, pass it somewhere, and get app data out. So we'll define another method called `get_app_from_link`. It takes in `self` and the link we want it to scrape. Each time I find a link that I want to get the app from, I'll pass it into this method. Once again, for now it just returns nothing. That's the starting point of my AppCrawler class. Now I want to model what an app is. Remember the pieces of information we wanted to scrape off each app page: the name, the developer, the price, and the links. So let's create another simple class.
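The AppCrawler class as described so far might look like the sketch below. It's written for Python 3 (the lesson itself uses Python 2), and the URL in the usage lines is a placeholder, not the Candy Crush Saga link used in the lesson.

```python
class AppCrawler:
    """Crawls app pages, starting from one URL and following links up to `depth` levels."""

    def __init__(self, starting_url, depth):
        self.starting_url = starting_url  # first app page to visit
        self.depth = depth                # 0 = only the starting page, 1 = one layer deeper, ...

    def crawl(self):
        # Entry point for all of the crawling logic; empty at this stage.
        return

    def get_app_from_link(self, link):
        # Will eventually turn the page at `link` into an App; empty at this stage.
        return


# Usage with a placeholder URL; crawl() does nothing yet.
crawler = AppCrawler("https://example.com/app-page", 0)
crawler.crawl()
```

Keeping `get_app_from_link` separate from `crawl` means scraping a single page can later be exercised on its own, without running a whole crawl.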
This one is just called App, and we'll do the same thing here: create an initializer, `__init__`. Once again it takes `self`, and then a name, a developer, a price, and links. Then we initialize them: `name` is set to name, `developer` to developer, `price` to price, and `links` to links. There's one more thing I want to do. You don't strictly need it for this project, but while you're learning this material it's nice to print out some data occasionally. As I mentioned in a previous lesson, it would be nice to take the information about these apps and do something with it: store it in a text file, serialize it to JSON, pass it to a web service, save it in a database, run some analytics on it, whatever you want. In this course, though, I'm focused on getting the data and showing you some techniques you can use to crawl around the web and extract it. So in this case, all I want is to be able to print an app to the console. To do that, I'm going to define the `__str__` method, which is called every time I print an instance of my App class. It takes just `self`, and to save you from watching me type it all out, I'll drop in some code. All it does is build one long concatenation of the app's name, developer, and price.
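Here is a Python 3 sketch of the App class. The lesson doesn't show the exact text its `__str__` produces, so the `Name:`/`Developer:`/`Price:` layout is an assumption, as are the sample values in the usage line.

```python
class App:
    """Holds the data scraped from one app page."""

    def __init__(self, name, developer, price, links):
        self.name = name
        self.developer = developer
        self.price = price
        self.links = links  # links to other app pages found on this one

    def __str__(self):
        # print() calls __str__, so this controls how an App looks on the console.
        # Assumed layout: one field per line.
        return ("Name: " + self.name +
                "\nDeveloper: " + self.developer +
                "\nPrice: " + self.price + "\n")


# Hypothetical sample values, for illustration only.
print(App("Example App", "Example Developer", "Free", []))
```

Python 3 strings are already Unicode, so this version doesn't need the `.encode('utf-8')` call the lesson adds for Python 2.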
Because I'm using Python 2, and this is one of the areas where Python 2 and 3 differ, there's a little trick here for concatenating strings of different encodings. In case we run into Unicode characters in the name, the developer, or the price of these apps, calling `.encode('utf-8')` on them makes them easier to print. That's pretty much the structure. Finally, at the bottom of the script, I want to actually use these classes. I'll create a new instance of my crawler by calling AppCrawler and passing in the link I want to start with. If you recall, that was the link to Candy Crush Saga, so that's the first URL the crawler will navigate to. Then we give it a depth. To test things out, we'll start with a depth of 0, because we don't want to worry about going deeper yet; we just want the data on that first layer, which is that particular app. Once the crawler is initialized, I can call its `crawl` method without passing anything in. It uses the data we set up in the initializer to start crawling, get the app from each link, and dig down through the levels to see how far it can go and gather a bunch of information. Ultimately, I'm going to get some apps out of this whole thing, so that's one more thing I want to add up in the class: `self.apps`, a list of the apps we find. Then, down at the bottom, I can say `for app in crawler.apps`.
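The `.encode('utf-8')` trick is specific to Python 2, where it turns a Unicode string into a byte string (Python 2's `str`), which is what `__str__` is expected to return there. The short Python 3 sketch below shows why you wouldn't carry it over: `str` is already Unicode, encoding produces `bytes`, and `__str__` is not allowed to return `bytes`. The app name and the deliberately broken `Bad` class are made up for illustration.

```python
name = "Caf\u00e9 Puzzle"  # hypothetical app name containing a non-ASCII character

# Python 3: str is already Unicode, so concatenation just works.
line = "Name: " + name
print(len(line))  # 17 characters

# .encode("utf-8") gives bytes, not str; the accented character takes 2 bytes.
encoded = line.encode("utf-8")
print(type(encoded).__name__, len(encoded))  # bytes 18


class Bad:
    def __str__(self):
        return line.encode("utf-8")  # wrong in Python 3: returns bytes, not str


try:
    str(Bad())
except TypeError as exc:
    print("TypeError:", exc)  # __str__ must return a str
```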
I'll iterate through all of those apps and simply `print app`. That print statement calls the `__str__` method we defined, which displays what we found on the screen. So this is the basic structure of the app. In the next lesson, I'll start introducing how we actually go and get the data, bringing in some libraries to help us crawl and retrieve it.
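For reference, here is the whole skeleton assembled into one Python 3 script, including the `apps` list and the loop at the bottom. It is a sketch: the URL is a placeholder, the `__str__` layout is assumed, and since `crawl` is still empty, running it prints nothing yet.

```python
class App:
    """Holds the data scraped from one app page."""

    def __init__(self, name, developer, price, links):
        self.name = name
        self.developer = developer
        self.price = price
        self.links = links

    def __str__(self):
        # Assumed layout; called by print().
        return ("Name: " + self.name + "\nDeveloper: " + self.developer +
                "\nPrice: " + self.price + "\n")


class AppCrawler:
    """Crawls app pages starting from starting_url, up to `depth` levels deep."""

    def __init__(self, starting_url, depth):
        self.starting_url = starting_url
        self.depth = depth
        self.apps = []  # App objects collected while crawling

    def crawl(self):
        # Entry point; the crawling and scraping logic comes in later lessons.
        return

    def get_app_from_link(self, link):
        # Will return an App built from the page at `link`; empty for now.
        return


# Placeholder URL: substitute the app page you want to start from.
crawler = AppCrawler("https://example.com/app-page", 0)
crawler.crawl()

for app in crawler.apps:
    print(app)  # print() calls App.__str__
```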